Paper Interaction Tool (Link)

Main conference program guide (Link)

Dataset Contributions table can be found (here)

2-page "At-a-Glance" summary (Link)

Workshop/Tutorial Program (Link)

At a Glance Workshop/Tutorials (link)

06/16 Virtual links will be emailed sometime on Friday 6/17 as well as posted here. There will be a virtual POSTER SESSION held on June 28th at 10:00 AM CDT and 10:00 PM CDT. Authors can choose to attend either or both for additional q&a. The papers present will be available here.

All Times are based on US Central Time Zone

Registration Hours:

Saturday 6/18 4PM – 6PM

Sunday 6/19 7AM – 5PM

Monday 6/20 7AM – 5PM

Tuesday 6/21 7AM – 5PM

Wednesday 7:30 AM – 4PM

Thursday 8AM – 2PM

Friday 8AM – 2PM

Registration will be in the Mosaic Lounge just inside the Julia Street entrance of the convention center. Please have your CLEAR or SafeExpo app updated and your QR code or confirmation number ready for quicker badge retrieval. If you did not complete the CLEAR or SafeExpo, please remember to bring your valid PCR test results and check-in at the far end of the registration counters. Reminder – there will be NO BADGE reprints. Anyone caught sharing CVPR badges in-person or online will be expelled from CVPR.

Program Overview

|

Main Conference |

Tuesday, June 21 - Friday, June 24, 2022 |

|

Workshops & Tutorials |

Sunday, June 19 & Monday, June 20, 2022 |

Paper Sessions https://docs.google.com/spreadsheets/d/1ilb2Wqea0uVKxlJsdJHR-3IVaWeqsWyk2M2aGmYVAPE

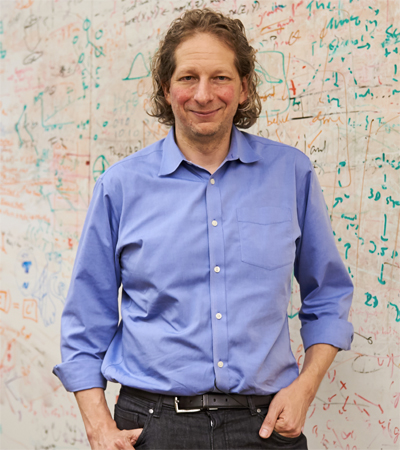

KEYNOTE Tuesday, June 21 5:00PM CDT |

Josh Tennebaum Professor Department of Brain and Cognitive Sciences, Massachusetts Institute of Technology

|

Josh Tenenbaum is Professor of Computational Cognitive Science at the Massachusetts Institute of Technology in the Department of Brain and Cognitive Sciences, the Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Center for Brains, Minds and Machines (CBMM). He received a BS from Yale University (1993) and a PhD from MIT (1999). His long-term goal is to reverse-engineer intelligence in the human mind and brain, and use these insights to engineer more human-like machine intelligence. In cognitive science, he is best known for developing theories of cognition as probabilistic inference in structured generative models, and applications to concept learning, causal reasoning, language acquisition, visual perception, intuitive physics, and theory of mind. In AI, he and his group have developed widely influential models for nonlinear dimensionality reduction, probabilistic programming, and Bayesian unsupervised learning and structure discovery. His current research focuses on the development of common sense in children and machines, common sense scene understanding in humans and machines, and models of learning as program synthesis. His work has been recognized with awards at conferences in Cognitive Science, Philosophy and Psychology, Computer Vision, Neural Information Processing Systems, Reinforcement Learning and Decision Making, and Robotics. He is the recipient of the Troland Research Award from the National Academy of Sciences (2012), the Howard Crosby Warren Medal from the Society of Experimental Psychologists (2015), the R&D Magazine Innovator of the Year (2018), and a MacArthur Fellowship (2019), and he is an elected member of the American Academy of Arts and Sciences.

Title: |

KEYNOTE Wednesday, June 22 5:00PM CDT |

Xuedong Huang Technical Fellow Chief Technology Officer Azure AI

|

Dr. Xuedong Huang is a Technical Fellow in the Cloud and AI group at Microsoft and the Chief Technology Officer for Azure AI. Huang oversees the Azure Cognitive Services team and manages researchers and engineers around the world who are building artificial intelligence-based services to power intelligent applications. He has held a variety of responsibilities in research, incubation, and production to advance Microsoft’s AI stack from deep learning infrastructure to enabling new experiences. In 1993, Huang joined Microsoft to establish the company's speech technology group, where he led Microsoft’s spoken language efforts for over a few decades. In addition to bringing speech recognition to the mass market, Huang led his team in achieving historical human parity milestones in speech recognition, machine translation, conversational question answering, machine reading comprehension, image captioning and commonsense language understanding. Huang’s team provides AI-based Azure Vision, Speech, Language and Decision services that power popular world-wide applications from Microsoft like, Office 365, Dynamics, Xbox and Teams to numerous 3rd party services and applications. Huang is a recognized executive and scientific leader from his contributions in the software and AI industry. He is an Institute of Electrical and Electronics (IEEE) and Association for Computer Machinery (ACM) Fellow. Huang was named Asian American Engineer of the Year (2011), Wired Magazine's 25 Geniuses Who Are Creating the Future of Business (2016), and AI World's Top 10 (2017). Huang holds over 170 patents and has published over 100 papers and two books. Before Microsoft, Huang received his PhD in Electrical Engineering from University of Edinburgh and was on the faculty at Carnegie Mellon University. He is a recipient of the Allen Newell Award for Research Excellence. Title: Abstract : In this keynote, I will share our progress on Integrative AI, a multi-lingual, multi-modal approach addressing this challenge using a holistic, semantic representation to unify various tasks in speech, language, and vision. To apply Integrative AI to computer vision, we have been developing a foundation model called Florence, which introduces the concept of a semantic layer through large-scale image and language pretraining. Our Florence model distills visual knowledge and reasoning into an image and text transformer to enable zero-shot and few-shot capabilities for common computer vision tasks such as recognition, detection, segmentation, and captioning. Through bridging the gap between textural representation and various vision downstream tasks, we not only achieved state-of-the-art results on dozens of benchmarks such as ImageNet-1K zero-shot, COCO segmentation, VQA and Kinetcs-600, but also discovered novel results of image understanding. We are encouraged by these preliminary successes and believe we have only scratched the surface of Integrative AI. As language is at the core of human intelligence, I foresee that the semantic layer will empower computer vision to go beyond visual perception and connect pixels seamlessly to the core of human intelligence: intent, reasoning, and decision. |

KEYNOTE Thursday, June 23 5:00PM CDT |

Kavita Bala Dean Ann S. Bowers College of Computing and Information Science,Cornell University

|

Kavita Bala is the dean of Cornell Ann S. Bowers College of Computing and Information Science at Cornell University. Bala received her S.M. and Ph.D. from the Massachusetts Institute of Technology (MIT). Before becoming dean, she served as the chair of the Cornell Computer Science department. Bala leads research in computer vision and computer graphics in recognition and visual search; material modeling and acquisition using physics and learning; physically based scalable rendering; and perception. She co-founded GrokStyle, a visual recognition AI company, which drew IKEA as a client, and was acquired by Facebook in 2019. Bala is the recipient of the SIGGRAPH Computer Graphics Achievement Award (2020) and the IIT Bombay Distinguished Alumnus Award (2021), and she is an Association for Computing Machinery (ACM) Fellow (2019) and Fellow of the SIGGRAPH Academy (2020). Bala has received multiple Cornell teaching awards (2006, 2009, 2015). Bala has authored the graduate-level textbook “Advanced Global Illumination” and has served as the Editor-in-Chief of Transactions on Graphics (TOG). Title: Abstract: We use visual input to understand and explore the world we live in: the shapes we hold, the materials we touch and feel, the activities we do, the scenes we navigate, and more. With the ability to image the world, from micron resolution in CT images to planet-scale satellite imagery, we can build a deeper understanding of the appearance of individual objects, and also build our collective understanding of world-scale events as recorded through visual media. |

PANEL Friday, June 24 5:00 PM

Embodied Computer Vision

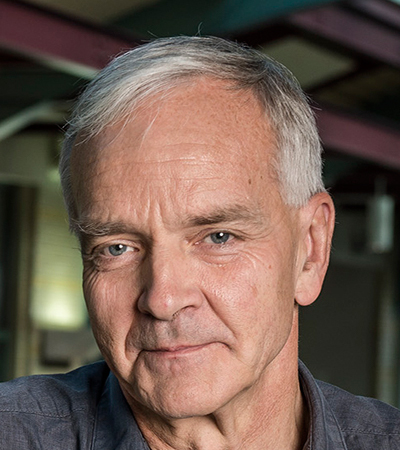

Moderator: Martial Hebert (CMU)

|

Martial Hebert is University Professor at Carnegie Mellon University, where is currently Dean of the School of Computer Science. His research interests include computer vision, machine learning for computer vision, 3-D representations and 3-D computer vision, and autonomous systems. |

Kristen Grauman (UT Austin, Meta AI)

|

Kristen Grauman is a Professor in the Department of Computer Science at the |

Nicholas Roy (Zoox, MIT)

|

Nicholas Roy is a Principal Software Engineer at Zoox and the Bisplinghoff Professor of Aeronautics & Astronautics at MIT. He received |

Michael Ryoo (Stony Brook University, Google)

|

Michael S. Ryoo is a SUNY Empire Innovation Associate Professor in the Department of Computer Science at Stony Brook University, and is also a staff research scientist at Robotics at Google. His research focuses on video representations, self-supervision, neural architectures, egocentric perception, as well as robot action policy learning. He previously was an assistant professor at Indiana University Bloomington, and was a staff researcher within the Robotics Section of NASA's Jet Propulsion Laboratory (JPL). He received his Ph.D. from the University of Texas at Austin in 2008, and B.S. from Korea Advanced Institute of Science and Technology (KAIST) in 2004. His paper on robot-centric activity recognition at ICRA 2016 received the Best Paper Award in Robot Vision. He provided a number of tutorials at the past CVPRs (including CVPR 2022/2019/2018/2014/2011) and organized previous workshops on Egocentric (First-Person) Vision. |

PC Hosts:

Kristin Dana (Rutgers University, Steg AI)

Dimitris Samaras, (Stony Brook University)

This year CVPR is celebrating Juneteenth in a city with outsize significance in black history. As a nod to the black artistic and musical tradition of New Orleans CVPR is working with a local artist to feature a special screen print on the back of the conference t-shirt.

|

|

Self-taught artist M. Sani was born in Cameroon, also known as the “hinge of Africa.” He left his country to pursue his dream of sharing his art with the world and settled briefly in Lafayette, Louisiana, before moving to New Orleans. But after Katrina hit, he was forced to close his art gallery and moved to St Louis, Missouri. Five years later, he re-opened the art gallery on the famed Royal Street in the French Quarter of New Orleans as he is not going to give up on his dream. His work has been featured at the Festival National des Arts et de la Culture (FENAC) in Cameroon, where he represented the Adamawa region in Ngaoundéré and Ebolowa. He also has had art placements in the New Orleans Jazz and Heritage Festival and other premier festivals. M. Sani is a working artist in an ever-changing art culture

His work has been described as bold, abstract, and primordial. “My inspirations come from my dreams and nature,” M. Sani says. “Each of my paintings tells a history. You just need to observe and listen.” He uses oil, acrylic, mixed-media, and collage techniques. His process includes, dripping, squeezing, and splashing paint. He says he sometimes paints on reclaimed wood from old historic houses. His work combines the culture of Cameroon and New Orleans. He likes to make people think with his work. “Some important themes such as the spiritual influence of religions, tribal ceremonies, and music are present in my work,” M. Sani says. When M. Sani says he’s working on his musicians, he is referring to his All That Jazz, Let’s Jazz it Up, and Colors of Jazz collections. The collections often feature silhouettes of musicians playing jazz into the early hours of the morning. M. Sani is looking to turn his gallery into an art space and hopes to bring back the “Little Artists Session,” a workshop for kids who want to express themselves creatively. He is also looking into selling his work virtually as NFTs.